In recent years, artificial intelligence (AI) has rapidly grown in importance, transforming both everyday life and the way organizations operate. While AI systems can be beneficial in many areas, their use has raised numerous concerns and prompted discussion about how they should be regulated.

In response to these challenges, the European Union has adopted a regulation on artificial intelligence, known as the AI Act. It is the first comprehensive piece of legislation regulating the use of AI systems across different markets, including the already heavily regulated banking sector, where financial and personal information must be handled responsibly and protected effectively.

What is the European AI Act and what is its significance in banking? These are the questions that this article will attempt to answer.

Many banking processes already rely on AI and will likely continue to do so. They include the following:

In these processes, language processing models are used to analyze and systematize large volumes of data, generally faster and more precisely than manual processing would allow. Because they rely on AI systems, all of these processes fall within the scope of the AI Act.

AI can be used for many purposes, from entertainment to highly specialized business applications. Because it can imitate, simulate, and augment reality, its unethical use carries a number of risks and can result in deceptive media (deepfakes), algorithmic bias, and privacy violations. To prevent these threats, clear rules for the responsible development and use of AI need to be established.

The AI Act defines an AI system as a machine-based system designed to operate with varying levels of autonomy that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers from the input it receives how to generate outputs. These outputs can take the form of predictions, content, recommendations, or decisions that can influence physical or virtual environments.

The act does not, however, define an AI model as such. It only defines “general-purpose AI models,” which are trained on large amounts of data and can competently perform a wide range of distinct tasks.

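The European Commission’s guidelines on the AI system definition note that whether a piece of software is an AI system should be determined by assessing its architecture and functionality against the seven elements of the definition:

- it is a machine-based system;
- it is designed to operate with varying levels of autonomy;
- it may exhibit adaptiveness after deployment;
- it operates for explicit or implicit objectives;
- it infers from the input it receives how to generate outputs;
- those outputs include predictions, content, recommendations, or decisions;
- those outputs can influence physical or virtual environments.

Not all of these elements need to be present at every stage: some may appear only while the system is being built, others only once it is in use.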

Modeling methods are mathematical and algorithmic techniques for representing real processes in the form of models that enable their analysis, prediction, or optimization. Depending on the approach, they can be based on rules and formulas or on data and self-learning.

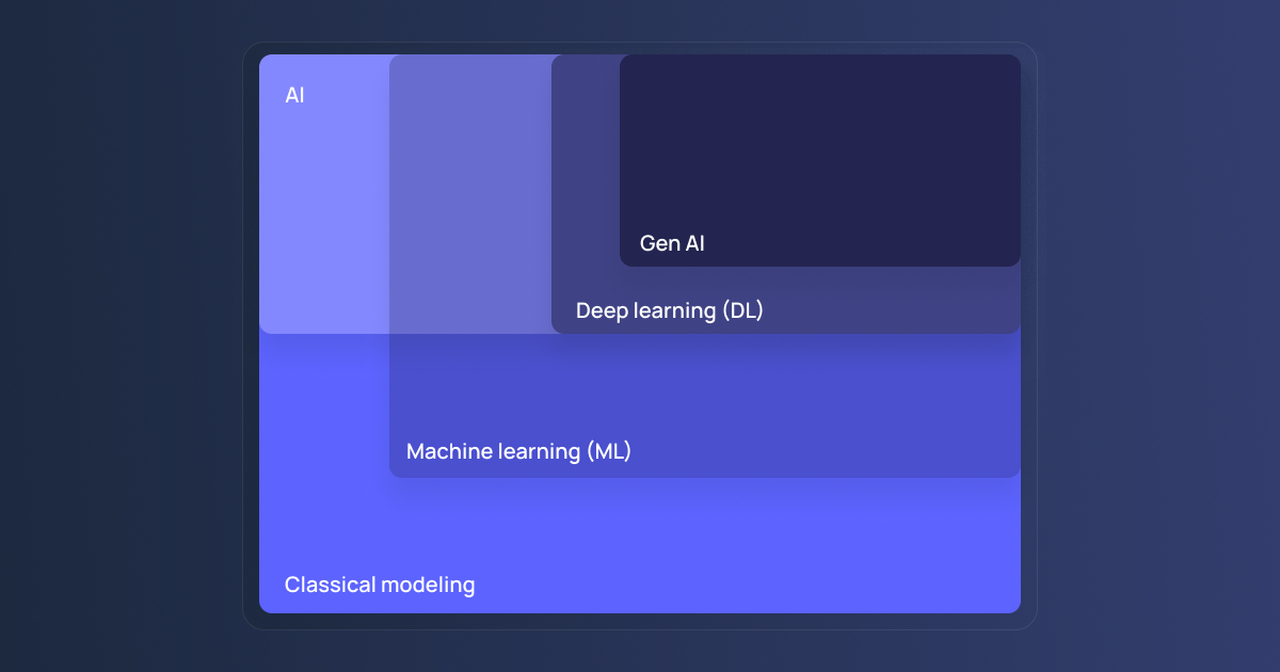

The following simplified classification is based on the April 2025 summary of the activities of the AI working group at the Banking Technologies Forum.

Classical modeling follows fixed rules and formulas created by humans, while artificial intelligence relies on algorithms with a learning component to create systems capable of performing tasks that typically require human intelligence.

Classification of modeling methods

Machine learning (ML) methods straddle both of the above categories, so their classification is not always straightforward. Traditional ML models, such as linear regression or decision trees, rely on fixed mathematical formulas and explicit logic, whereas more sophisticated methods learn patterns from feedback.

Deep learning (DL) methods are a subset of machine learning. They use multilayer neural networks to analyze complex patterns and large data sets, and are applied in audio analysis, image recognition, and natural language processing (NLP).

Generative artificial intelligence (GenAI) is a subset of deep learning comprising methods that learn patterns from large data sets and use them to generate new, similar content, such as text, images, video, or audio.

Each of the above modeling methods has different capabilities, applications, and limitations. Classifying AI systems appropriately allows them to be used efficiently and responsibly and helps reduce potential security risks.

The AI Act seeks to ensure the safe use of artificial intelligence while protecting fundamental rights. At the same time, it aims to stimulate innovation and encourage investment in AI. The act is therefore not designed to prohibit or limit the use of AI, but rather to encourage its responsible application.

The regulation defines the parties involved in the AI value chain, including providers, deployers, importers, distributors, and product manufacturers, and its provisions apply to every entity that meets at least one of the following criteria:

It is important to note that the AI Act is “horizontal” in scope: rather than addressing individual industries, such as banking, or specific types of AI systems, it applies broadly to the use of artificial intelligence across sectors. In this respect it resembles the GDPR, which also applies across sectors, rather than sector-specific legislation such as DORA.

Because AI systems are used for so many different purposes, the European legislator has organized the act’s requirements into tiers that reflect the level of risk associated with a given tool or its use.

Minimal or no risk. Although the act imposes no mandatory requirements in this category, it encourages all parties involved in producing, distributing, and deploying minimal-risk AI systems to adhere to voluntary codes of conduct.

Levels of risk

In this way, the AI Act covers a wide range of systems and solutions used across many industries and provides guidance on their safe and responsible use.

When classifying AI-based systems by risk, it is essential to look carefully at the context in which a given solution will be used and to analyze its potential impact on individuals and their rights, including the risk of harm.

Practices prohibited under the AI Act include assessing or predicting the risk that an individual will commit a crime based solely on profiling or personality traits, using biometric data to categorize individuals according to sensitive characteristics such as race or political opinions, and inferring a person’s emotions in the workplace or in educational institutions (except for medical or safety reasons).

The prohibited practices also include the use of subliminal or manipulative techniques and the exploitation of the vulnerabilities of individuals or groups due to their age, disability, or social or economic situation. In banking, a relevant example could be a system that personalizes financial offers using biased data: a system relying on customer age groups might offer the elderly financial products on unfavorable terms, causing them financial harm. Banks must therefore ensure that the data and tools used in AI-assisted processes are handled responsibly and used only for their intended purposes.

Other banned practices that are less likely to arise in banking include social scoring and the untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases.

The vast majority of obligations under the AI Act apply to high-risk systems, a category into which most artificial intelligence applications in banking also fall.

The criteria for classifying AI systems as high-risk include the use of remote biometric identification systems, as well as AI tools used for biometric categorization based on sensitive characteristics (where this is not already prohibited). In employment and workforce management, systems used in recruitment or to allocate tasks to bank employees also fall into this category, as do AI systems used as safety components in the management of critical digital infrastructure.

On the customer side, high-risk systems include tools used to assess the creditworthiness of individuals and, less frequently, tools used for risk assessment and pricing in life and health insurance. One example is a real-time credit risk monitoring system that adapts to new data, updating its assessment parameters and making decisions based on the results it generates.
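To make the context-dependent classification described above more concrete, the following minimal Python sketch records the banking examples mentioned in this article as a simple lookup table. The names `RiskTier`, `EXAMPLE_TIERS`, and `classify`, as well as the use-case keys, are hypothetical and chosen for illustration only; the table covers just the tiers discussed here and is a sketch under these assumptions, not a replacement for the legal assessment the act requires.

```python
from enum import Enum
from typing import Dict, Optional


class RiskTier(Enum):
    """Risk tiers discussed in this article (other tiers are omitted)."""
    PROHIBITED = "unacceptable risk (prohibited practice)"
    HIGH = "high risk"
    MINIMAL = "minimal or no risk"


# Hypothetical, illustrative mapping of the banking examples named in the
# article to risk tiers. Actual classification depends on the system's
# context and intended purpose and must be assessed case by case.
EXAMPLE_TIERS: Dict[str, RiskTier] = {
    "offers_exploiting_customer_age": RiskTier.PROHIBITED,
    "social_scoring": RiskTier.PROHIBITED,
    "untargeted_facial_image_scraping": RiskTier.PROHIBITED,
    "creditworthiness_assessment": RiskTier.HIGH,
    "recruitment_screening": RiskTier.HIGH,
    "employee_task_allocation": RiskTier.HIGH,
    "life_health_insurance_pricing": RiskTier.HIGH,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the illustrative tier for a known example, or None when the
    use case is not listed and needs its own contextual assessment."""
    # Deliberately no default: an unknown use case is NOT assumed minimal-risk.
    return EXAMPLE_TIERS.get(use_case)


if __name__ == "__main__":
    for case in ("creditworthiness_assessment", "social_scoring", "customer_faq_chatbot"):
        tier = classify(case)
        label = tier.value if tier else "requires case-by-case assessment"
        print(f"{case}: {label}")
```

Returning `None` instead of falling back to the minimal-risk tier reflects the point made above: a system’s risk level depends on the context in which it is used, so an unlisted use case has to be assessed on its own merits.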

The AI Act also provides for the European AI Office, a body within the European Commission that oversees the implementation of the act’s provisions, and for the European Artificial Intelligence Board, which comprises representatives of the EU member states. The Office is tasked with fostering the development and responsible use of AI technology while ensuring protection against the associated risks, and its responsibilities include enforcing the rules for general-purpose AI models. The Board, in turn, fulfills an advisory role, assisting the Commission and the member states in applying the AI Act.

To a certain extent, the supervision and enforcement of the AI Act is to be entrusted to bodies responsible for a given industry. In the case of banking and financial services, these will be the relevant financial services authorities in the member states, together with the European Supervisory Authorities (ESAs): the European Banking Authority (EBA), the European Securities and Markets Authority (ESMA), and the European Insurance and Occupational Pensions Authority (EIOPA).

Given the act’s prescriptive character, most organizations could not realistically achieve compliance immediately. For this reason, the act, which entered into force on 1 August 2024, is being phased in over three years, starting with the prohibitions that have applied since 2 February 2025.

The efficient use of artificial intelligence in banking offers many opportunities for process optimization. At the same time, its irresponsible or incompetent use can have serious consequences. It is therefore important for financial institutions to identify the risks associated with implementing modern AI-based solutions and to mitigate them.

Skillfully interpreting the AI Act and applying it in banking is a fundamental step toward ensuring regulatory compliance, enhancing the security and transparency of AI systems, minimizing legal risks, and building customer trust in the technologies used in financial services.